About me

8/22—8/25

I recently graduated with a PhD in EECS from UC Berkeley, focusing on deep learning and reinforcement learning, under the supervision of Alexandre Bayen in Berkeley AI Research (BAIR).

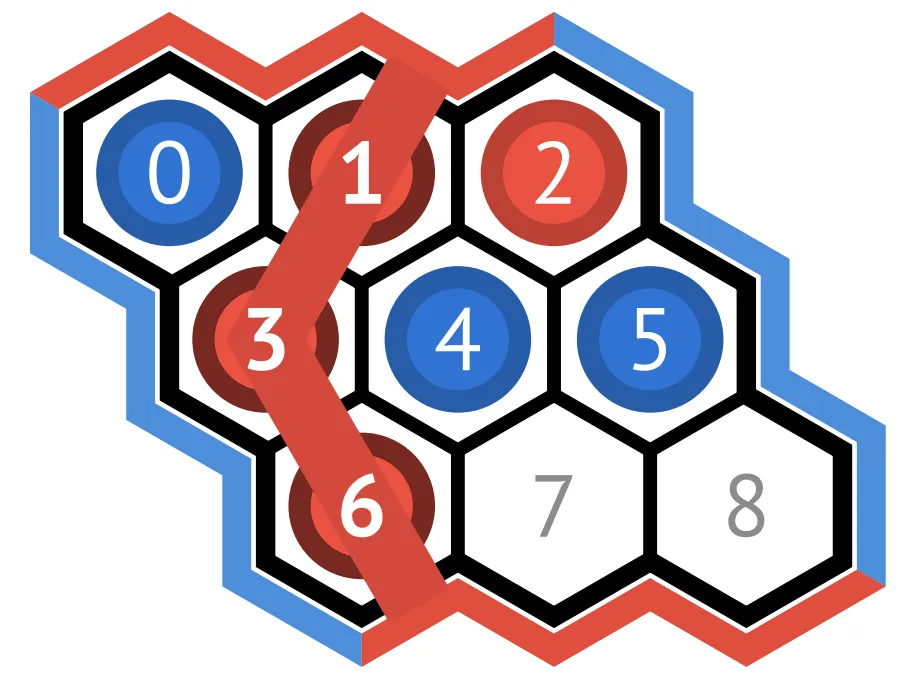

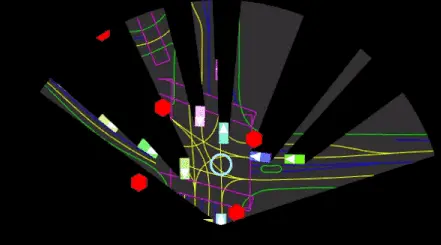

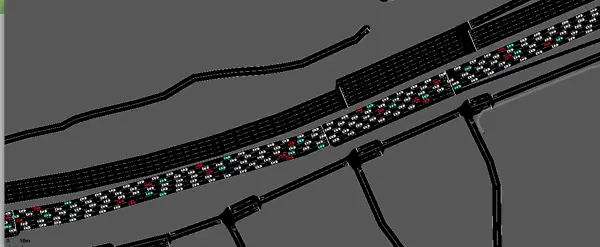

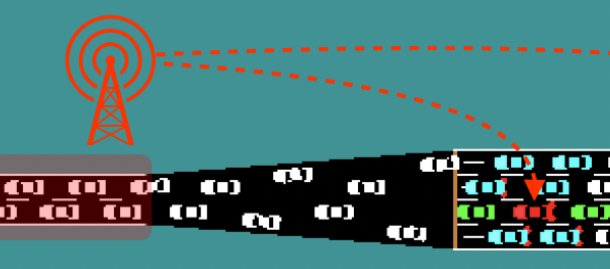

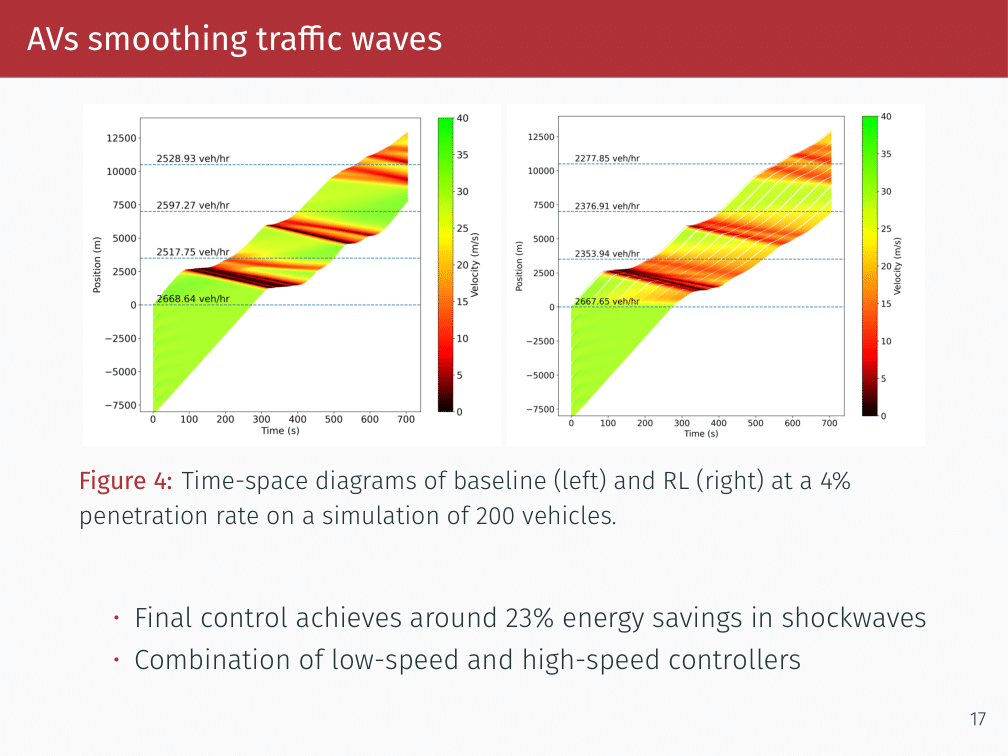

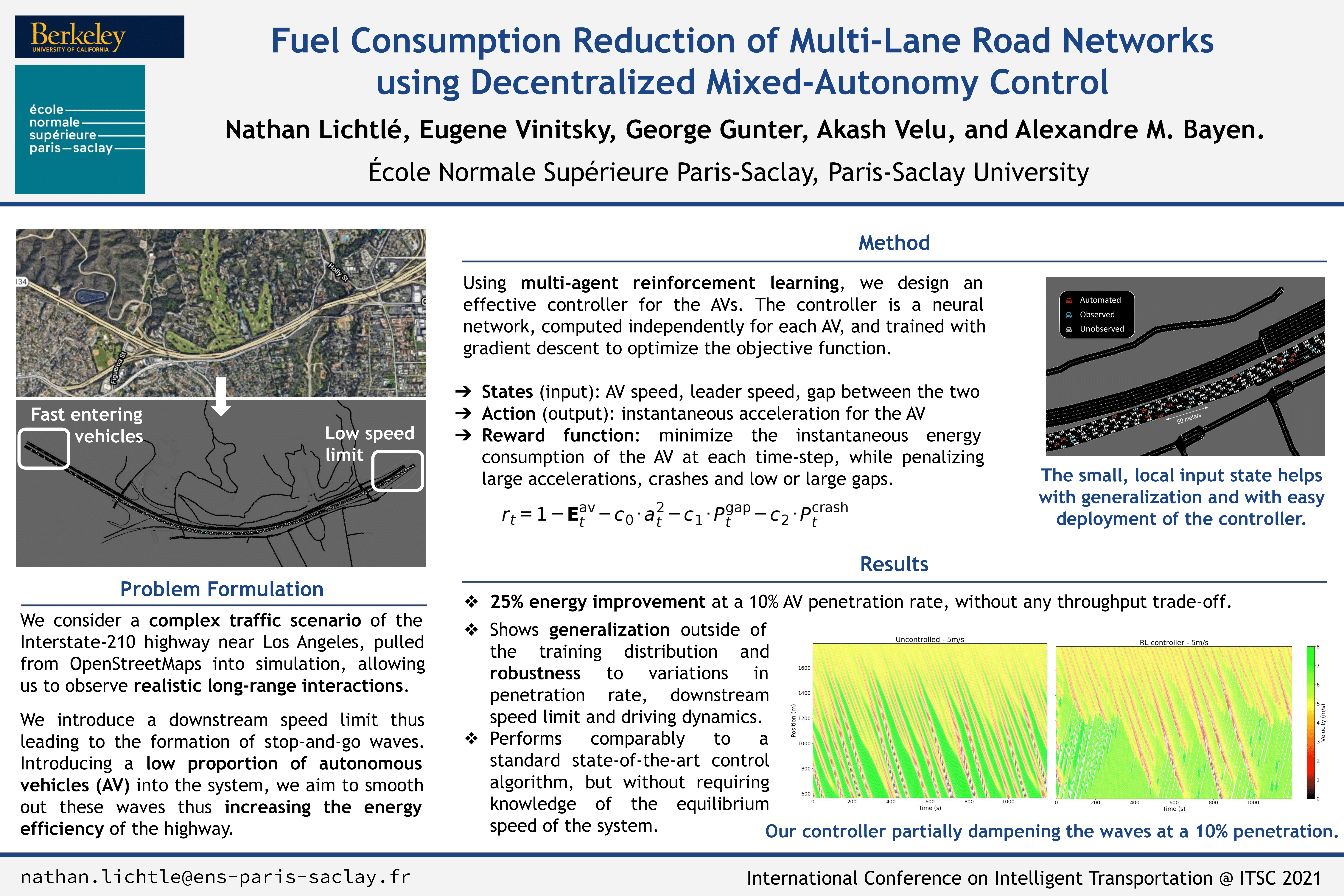

I've worked on RL for autonomous driving and traffic optimization, culminating in CIRCLES, the largest traffic smoothing field test to date, where I designed and trained RL agents to control 100 autonomous vehicles in live highway traffic during rush hour. More broadly, I've applied AI to control multi-agent systems, built fast data-driven simulators for RL, and integrated PDE-inspired models with neural networks for accurate long-term sequential traffic forecasting. I am also interested in the potential of language models in control systems.

10/21—12/24

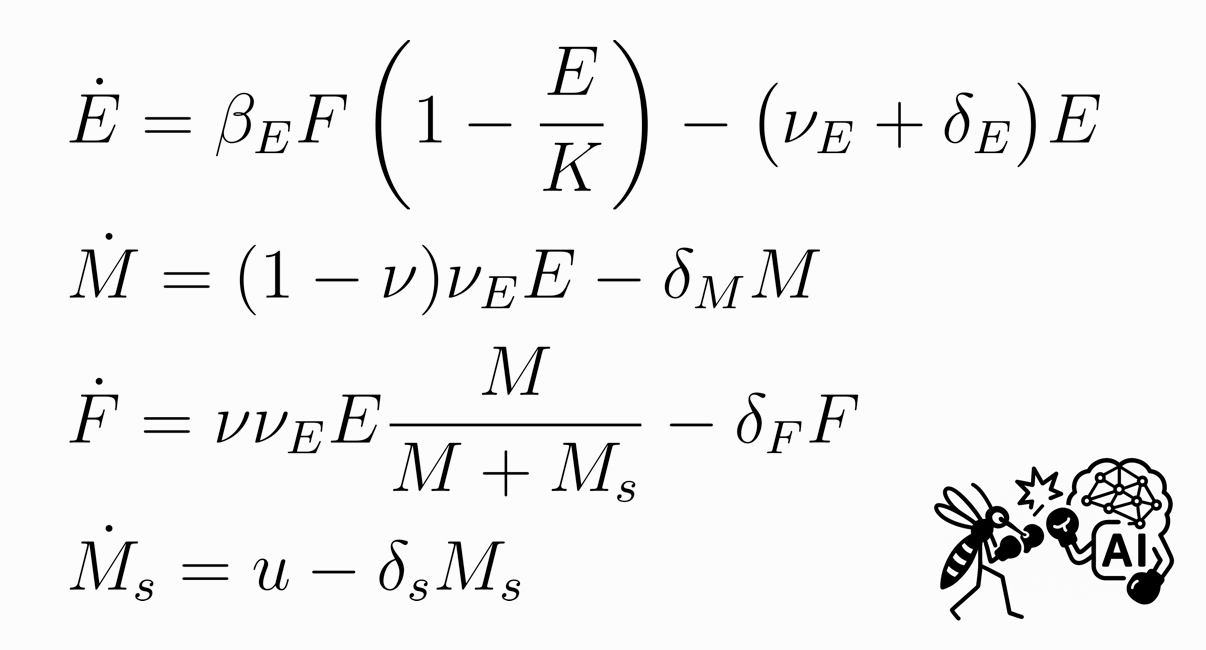

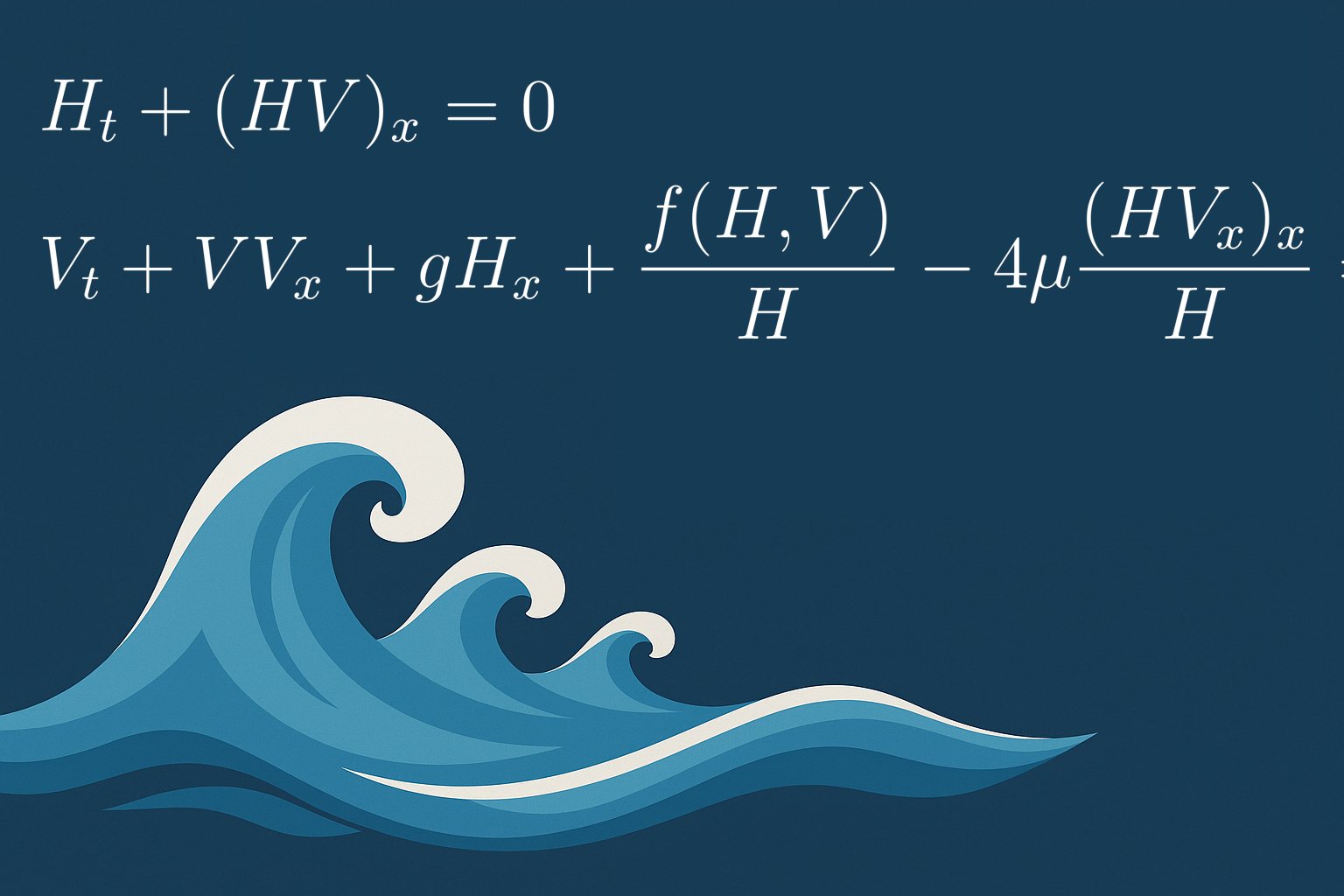

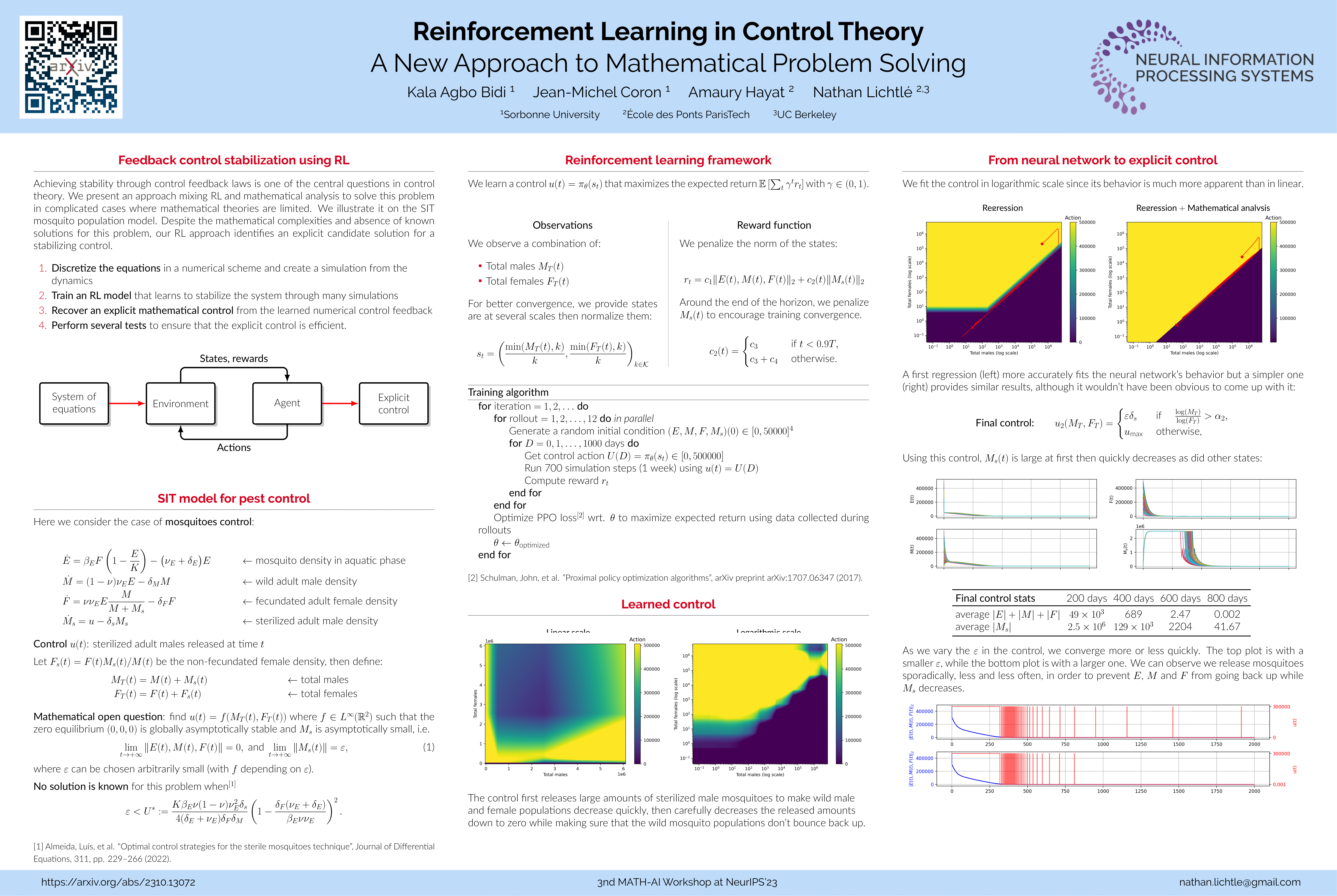

Joint PhD with Amaury Hayat at École nationale des ponts et chaussées (Institut Polytechnique de Paris), in the CERMICS research center, where I worked on reinforcement learning, control, and partial differential equations.

9/17—8/21

Previously, I completed my B.S. and M.S. (MVA Master) at École Normale Supérieure (ENS) Paris-Saclay, in the CS department.